Convention on Certain Conventional Weapons (CCW), Meeting of Experts on Lethal Autonomous Weapons Systems (LAWS), 13 - 17 April 2015, Geneva. Statement of the ICRC

Thank you Mr Chairman.

The International Committee of the Red Cross (the ICRC) is pleased to contribute its views to this second CCW Meeting of Experts on "Lethal Autonomous Weapon Systems". The CCW, which is grounded in international humanitarian law (IHL), provides an important framework to further our understanding of the technical, legal, ethical and policy questions raised by the development and use of autonomous weapon systems in armed conflicts.

This week will provide an opportunity to build on last year's meeting to develop a clearer understanding of the defining characteristics of autonomous weapon systems and their current state of development, so as to begin to identify the best approaches to addressing the legal and ethical concerns raised by this new technology of warfare.


We will have the opportunity to comment in more detail during the thematic sessions, but at the outset we would like to highlight a few key points on which the ICRC believes attention should be focused this week.

We first wish to recall that the ICRC is not at this time calling for either a ban or a moratorium on “autonomous weapon systems”. However, we are urging States to consider the fundamental legal and ethical issues raised by autonomy in the ‘critical functions’ of weapon systems before these weapons are further developed or deployed in armed conflicts.

We also wish to stress that our thinking about this complex subject continues to evolve as we gain a better understanding of current and potential technological capabilities, of the military purposes of autonomy in weapons, and of the resulting legal and ethical issues raised.

To ensure a focussed discussion, the ICRC believes that it will be important to have a clearer common understanding of the object of the discussion, and in particular of what constitutes an autonomous weapon system. Without engaging in a definition exercise, there is a need to set some boundaries for the discussion.

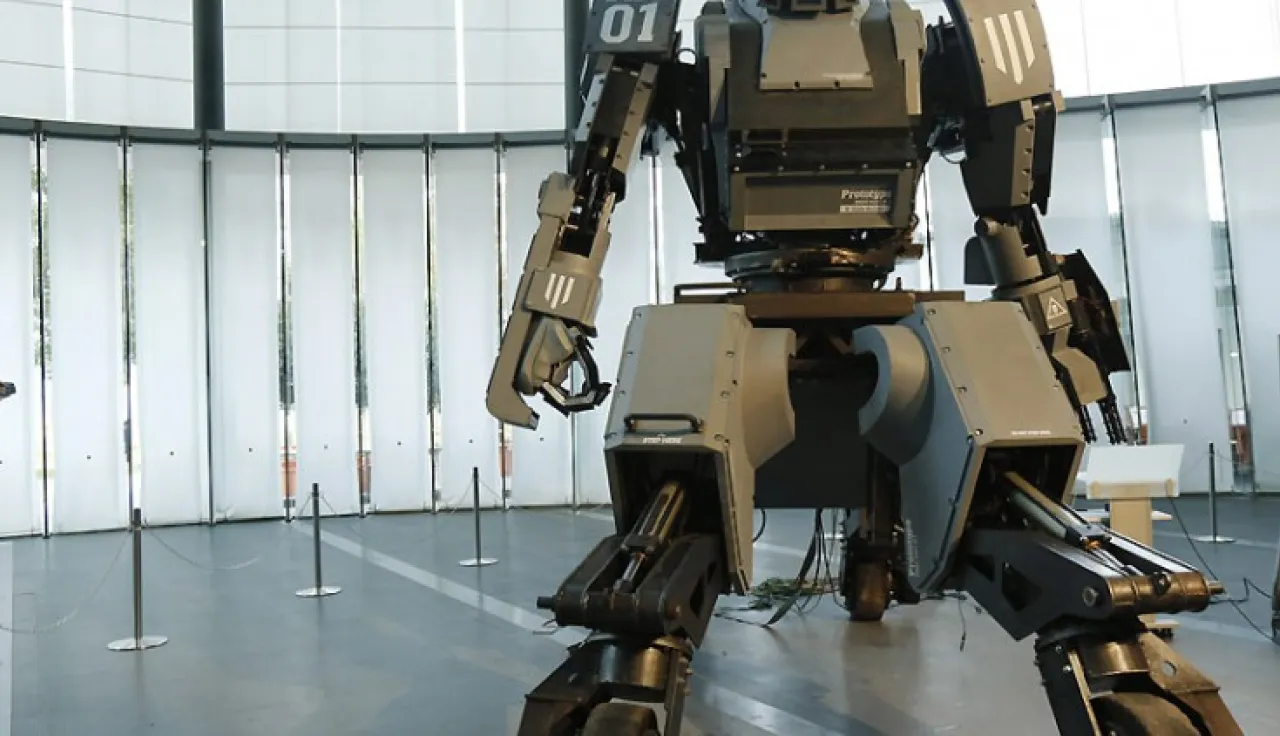

As the ICRC proposed at last year’s CCW Meeting of Experts, an autonomous weapon system is one that has autonomy in its ‘critical functions’, meaning a weapon that can select (i.e. search for or detect, identify, track) and attack (i.e. intercept, use force against, neutralise, damage or destroy) targets without human intervention. We have suggested that it would be useful to focus on how autonomy is developing in these ‘critical functions’ of weapon systems because these are the functions most relevant to ‘targeting decision-making’, and therefore to compliance with international humanitarian law, in particular its rules on distinction, proportionality and precautions in attack. Autonomy in the critical functions of selecting and attacking targets also raises significant ethical questions, notably when force is used autonomously against human targets.

The ICRC believes that it would be most helpful to ground discussions of autonomous weapon systems in current and emerging weapon systems that are pushing the boundaries of human control over the critical functions. Hypothetical scenarios about possible developments far off in the future may be inevitable when discussing a new and continuously evolving technology, but there is a risk that by focussing exclusively on such hypothetical scenarios, we will neglect autonomy in the critical functions of weapon systems that actually exist today, or that are currently in development and intended for deployment in the near future.

From what we understand, many of the existing autonomous weapon systems have autonomous ‘modes’, and therefore only operate autonomously for short periods. They also tend to be highly constrained in the tasks they are used for, the types of targets they attack, and the circumstances in which they are used. Most existing systems are also overseen in real-time by a human operator.

However, future autonomous weapon systems could have more freedom of action to determine their targets, operate outside tightly constrained spatial and temporal limits, and encounter rapidly changing circumstances. The current pace of technological change and military interest in autonomy for weapon systems lend urgency to the international community’s consideration of the legal and ethical implications of these weapons.

As the ICRC has stressed in the past, closer examination of existing and emerging autonomous weapon systems may provide useful insights regarding what level of autonomy and human control may be considered acceptable or unacceptable, and under which circumstances, from a legal and ethical standpoint. In this respect, we encourage States to share as far as possible their legal reviews of existing weapon systems with autonomy in their critical functions. This would allow for more informed deliberations.

Mr. Chairman,

Based on discussions that took place last year in the CCW and elsewhere, there appears to be broad agreement among States on the need to retain human control over the critical functions of weapon systems. States should now turn their attention to agreeing a framework for determining what makes human control of a weapon meaningful or adequate. Discussions should focus on the types of controls that are required, in which situations, and at which stages of the process – programming, deployment and/or targeting (selecting and attacking a target).

It is also not disputed that autonomous weapons intended for use in armed conflict must be capable of being used in accordance with international humanitarian law (IHL), in particular its rules of distinction, proportionality and precautions in attack. Indeed, weapons with autonomy in their critical functions are not being developed in a ‘legal vacuum’; they must comply with existing law.

Based on current and foreseeable robotics technology, it is clear that compliance with the core rules of IHL poses a formidable technological challenge, especially as weapons with autonomy in their critical functions are assigned more complex tasks and deployed in more dynamic environments than has been the case until now. There are serious doubts about the ability of autonomous weapon systems to comply with IHL in all but the narrowest of scenarios and the simplest of environments. In this respect, it seems evident that overall human control over the selection of, and use of force against, targets will continue to be required.

In discussions this week, we encourage States that have deployed, or are currently developing, weapon systems with autonomy in their critical functions, to share their experience of how they are ensuring that these weapons can be used in compliance with IHL, and in particular to share the limits and conditions imposed on the use of weapons with autonomous functions, including in terms of the required level of human control. Lessons learned from the legal review of autonomy in the critical functions of existing and emerging weapon systems could help to provide a guiding framework for future discussions.

In this respect, the ICRC welcomes the wide recognition of the obligation for States to carry out legal reviews of any new technologies of warfare they are developing or acquiring, including weapons with autonomy in some or all of their critical functions. CCW Meetings of States Parties and Review Conferences have in the past recalled the importance of legal reviews of new weapons, which are a legal requirement for States party to Additional Protocol I to the Geneva Conventions. The ICRC encourages States that have not yet done so to establish weapons review mechanisms and stands ready to advise States in this regard. To this end, States may wish to refer to the ICRC’s Guide to the Legal Review of New Weapons, Means and Methods of Warfare.

Finally, Mr. Chairman, the ICRC wishes to again emphasise the concerns raised by autonomous weapon systems under the principles of humanity and the dictates of public conscience. As we have previously stated, there is a sense of deep discomfort with the idea of any weapon system that places the use of force beyond human control. In this respect, we would like this week to hear the views of delegations on the following crucial question for the future of warfare, and indeed for humanity: would it be morally acceptable, and if so under what circumstances, for a machine to make life and death decisions on the battlefield without human intervention?

We will be pleased to elaborate on our views further during the thematic sessions.

Thank you.